Network Performance of Thread on Tock

posted 2025 October 18

1909 words // est. 10 min. read

tags: embedded, programming, networking, Thread

The Tock embedded operating system now has support for the Thread networking protocol. Being able to effortlessly connect a low-power embedded device to extant IPv6 infrastructure and "talk" to it like any other conventional computer makes it even easier to integrate such hardware into new and existing CPS and IoT applications. I have had the opportunity to leverage Thread on Tock for transmitting audio data across the network and was impressed that it was more than sufficient for my needs. To get a clear understanding of how well Thread on Tock works, I ran some basic network performance tests to discover the limits of its current implementation.

Setup

The testing setup consists of a single nRF52840dk (I will call it the "application device") that connects to the network through a single Thread border router, another Thread-capable device that acts as a gateway to the rest of the IPv6 network. I am using a Raspberry Pi 4 paired with a second nRF52840dk as the border router. The additional nRF52840dk acts as a radio co-processor (RCP) that lets the Raspberry Pi speak the underlying 802.15.4 protocol and communicate with the application device, and, of course, the Raspberry Pi has both Wi-Fi and Ethernet to communicate with the rest of the computer network.

Here are the software details of the setup:

- The application device runs Tock OS revision d2816dd8.

- All testing applications running on the application device are in my fork of the `libtock-c` repository.

- The Thread networking stack compiles statically as part of the application, so there is no separate revision to track for it.

- The Raspberry Pi 4 is running Ubuntu 24.04.

- The border router implementation is `ot-br-posix` revision 8fb9f940.

- The radio co-processor is `ot-nrf528xx` revision 2d79ae5d.

The physical positioning of the devices is favorable for wireless communication. There is approximately three feet of open space between the application device and the radio co-processor connected to the Raspberry Pi. This is an ideal placement that provides a baseline for future experiments. There are no other devices on this Thread network aside from the Raspberry Pi border router and the application device.

Benchmarking

I am focused on two metrics in particular:

- application throughput (i.e., goodput)

- reliability.

In other words...

How quickly can we really push data between the application device and the border router? Although the 802.15.4 protocol allows up to 127 bytes in a single packet, by the time Thread and 802.15.4 finish taking their required overhead, only 79 free bytes remain per packet.

What kind of reliability can we expect from the 802.15.4 medium? In applications involving embedded devices, we tend to prefer straightforward, low-overhead ways of doing things. If one tried to do networking over Thread without a reliable transport protocol (i.e., used UDP), how often would data go missing or arrive corrupted?
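The 79 free bytes mean that a full 127-byte frame spends 48 bytes on headers. The sketch below shows what that implies for smaller payloads. It rests on a simplifying assumption: the 48-byte overhead stays the same whatever the payload size. 6LoWPAN header compression does not strictly guarantee this, so treat the numbers as rough.

```python
FRAME_MAX_B = 127                   # 802.15.4 maximum frame size
FREE_B = 79                         # payload bytes left after Thread/802.15.4 overhead
OVERHEAD_B = FRAME_MAX_B - FREE_B   # 48 bytes; assumed constant for every frame


def payload_efficiency(payload_b: int) -> float:
    """Fraction of a frame that carries application data (assumes fixed overhead)."""
    if not 0 < payload_b <= FREE_B:
        raise ValueError(f"payload must be 1..{FREE_B} bytes to fit in one frame")
    return payload_b / (payload_b + OVERHEAD_B)


if __name__ == "__main__":
    for size in (1, 10, 40, 79):
        print(f"{size:>2} B payload -> {payload_efficiency(size):.1%} of the frame")
```

Under that assumption, a 1-byte payload uses about 2% of the frame it rides in, and a 79-byte payload uses about 62%. Small packets pay a steep price in overhead.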

Application throughput (goodput)

I first take a look at the goodput from the application device to the border router. This is the likely networking bottleneck in any communication between the application device and the other end, whether that other end is a gateway, an edge server, or a cloud server. When I initially used Thread on Tock to transmit audio data from the application device to a computer on the local network, I was quite impressed with the data rates that the setup was achieving (albeit using an ideal configuration). My audio application took advantage of the largest packet size to transmit audio data, but I also wanted to ascertain the goodput for smaller packet sizes. Perhaps one needs to send low-latency sensor data from the Thread device and would like to know what is feasible for packets containing small amounts of sensor data.

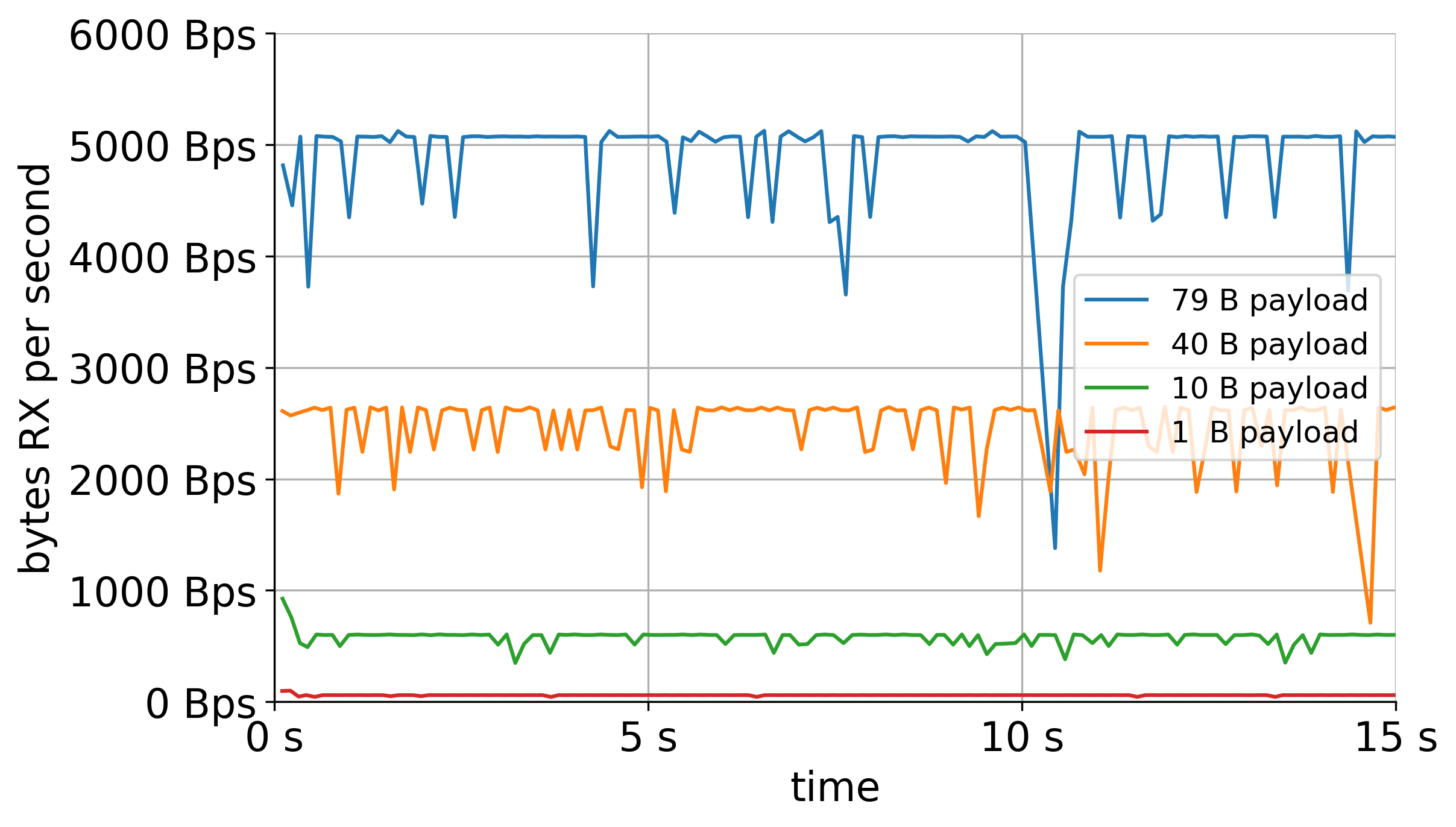

The first setup has the application device sending packets of a specific size as fast as possible in a loop to the border router. I did this with payloads of fixed size: 1 B, 10 B, 40 B, and 79 B (the payload size which brings the packet size to max at 127 B). By noting the incoming payload sizes on the border router, I obtained the following (mostly expected) results:

There is nothing too exciting here, but I was very happy to get 5 KiBps from the application device to the border router. However, there are many sudden drops in the goodput. Checking the logs reveals that they correspond to these errors from the border router:

[I] Mac-----------: Frame rx failed, error:Security, len:52, seqnum:56, type:Data, src:6e8ef9052967c615, dst:4a73c036da29d88b, sec:yes, ackreq:yes

[I] Mac-----------: Frame rx failed, error:Security, len:52, seqnum:57, type:Data, src:6e8ef9052967c615, dst:4a73c036da29d88b, sec:yes,

[I] Mac-----------: Frame rx failed, error:Security, len:52, seqnum:57, type:Data, src:6e8ef9052967c615, dst:4a73c036da29d88b, sec:yes, ackreq:yes

[I] Mac-----------: Frame rx failed, error:Security, len:52, seqnum:58, type:Data, src:6e8ef9052967c615, dst:4a73c036da29d88b, sec:yes,

[I] Mac-----------: Frame rx failed, error:Security, len:52, seqnum:58, type:Data, src:6e8ef9052967c615, dst:4a73c036da29d88b, sec:yes, ackreq:yes

[I] Mac-----------: Frame rx failed, error:Security, len:52, seqnum:59, type:Data, src:6e8ef9052967c615, dst:4a73c036da29d88b, sec:yes,

[I] Mac-----------: Frame rx failed, error:Security, len:52, seqnum:59, type:Data, src:6e8ef9052967c615, dst:4a73c036da29d88b, sec:yes, ackreq:yes

[I] Mac-----------: Frame rx failed, error:Security, len:52, seqnum:60, type:Data, src:6e8ef9052967c615, dst:4a73c036da29d88b, sec:yes,

[I] Mac-----------: Frame rx failed, error:Security, len:52, seqnum:60, type:Data, src:6e8ef9052967c615, dst:4a73c036da29d88b, sec:yes, ackreq:yes

[I] Mac-----------: Frame rx failed, error:Security, len:52, seqnum:61, type:Data, src:6e8ef9052967c615, dst:4a73c036da29d88b, sec:yes, ackreq:yes

I have not yet dug into what causes this. These moments are short-lived (on the order of seconds), but they clearly indicate that you should monitor your packets and verify your data closely if you care about the reliability of the data these devices transmit over Thread.
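Drops like these are easy to spot if the receiver logs each payload's arrival time and size and then sums the bytes per second. The following is a minimal sketch of such a receiver for the border-router side. It is not the tool used for these measurements. The port number, the 0.5 s socket timeout, and the one-second buckets are illustrative assumptions.

```python
import socket
import time
from collections import defaultdict


def record_payloads(port: int, duration_s: float) -> list[tuple[float, int]]:
    """Log (seconds since start, payload length) for every UDP datagram received."""
    samples = []
    with socket.socket(socket.AF_INET6, socket.SOCK_DGRAM) as sock:
        sock.bind(("::", port))   # all IPv6 interfaces, including the Thread one
        sock.settimeout(0.5)      # wake up periodically so the loop can end on time
        start = time.monotonic()
        while time.monotonic() - start < duration_s:
            try:
                data, _addr = sock.recvfrom(2048)
            except socket.timeout:
                continue
            samples.append((time.monotonic() - start, len(data)))
    return samples


def goodput_per_second(samples: list[tuple[float, int]]) -> list[int]:
    """Sum payload bytes into one-second buckets; seconds with no data show up as 0."""
    if not samples:
        return []
    buckets = defaultdict(int)
    for t, n in samples:
        buckets[int(t)] += n
    return [buckets[s] for s in range(max(buckets) + 1)]
```

Calling `goodput_per_second(record_payloads(port, 30))` with a hypothetical `port` would produce a bytes-per-second series. A short-lived drop then shows up as one or more low or zero entries.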

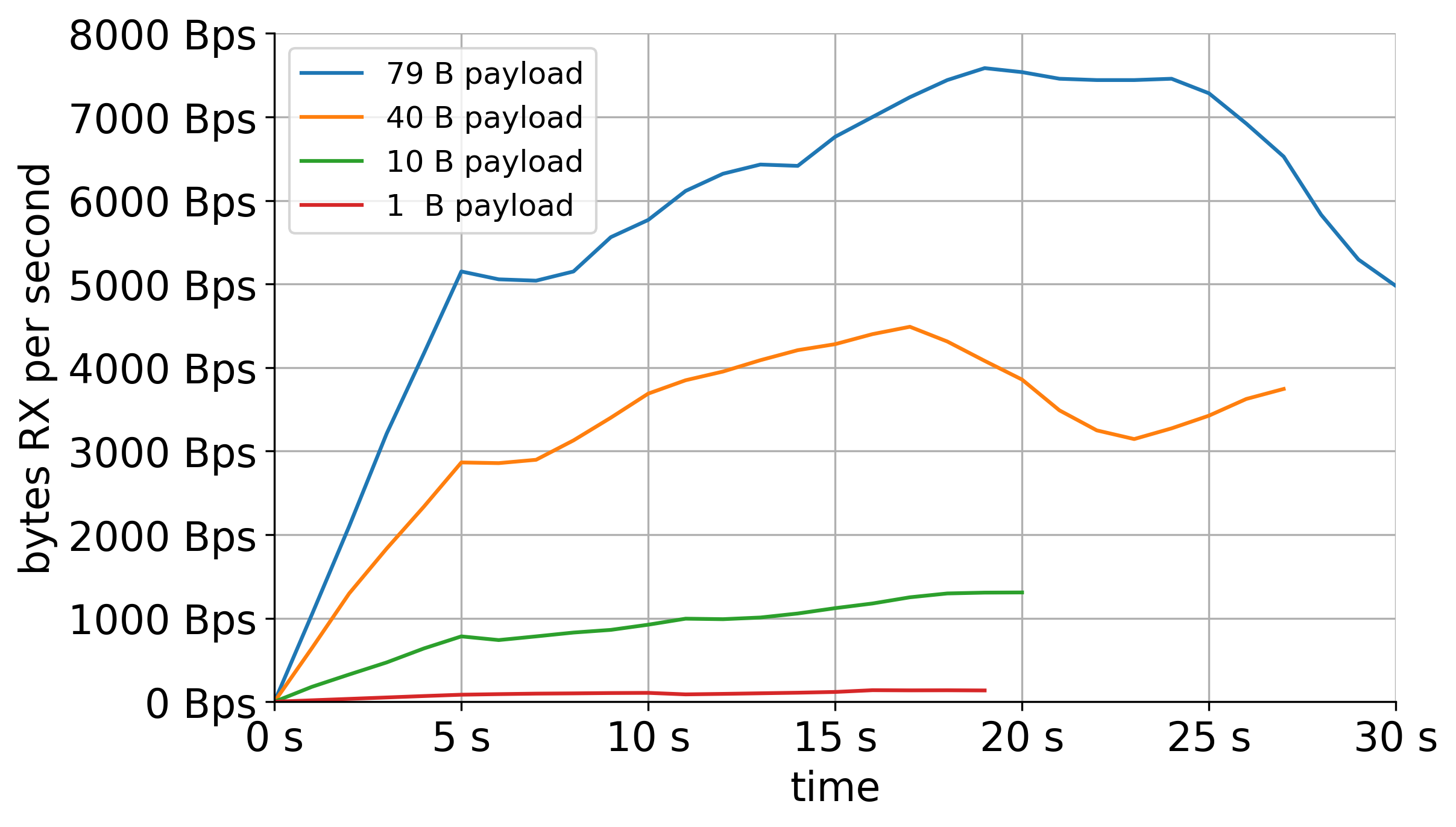

Next up is the goodput to the application device. This setup has the border router transmitting packets as quickly as possible to the application device. My initial intuition was that the border router, being a Raspberry Pi 4, would easily flood the application device with packets and cause trouble for the application device. However, I never encountered any runtime issues with the application device... The goodput measurements for data transmission from the border router tothe application device turned out as follows:

The results exposed some flaky behavior with the border router setup:

[W] P-RadioSpinel-: radio tx timeout

[C] P-RadioSpinel-: Failed to communicate with RCP - no response from RCP during initialization

[C] P-RadioSpinel-: This is not a bug and typically due a config error (wrong URL parameters) or bad RCP image:

[C] P-RadioSpinel-: - Make sure RCP is running the correct firmware

[C] P-RadioSpinel-: - Double check the config parameters passed as `RadioURL` input

[C] Platform------: HandleRcpTimeout() at radio_spinel.cpp:2035: RadioSpinelNoResponse

For these runs, I wanted to capture at least 30 seconds of data, but in most runs the RCP connected to the Raspberry Pi suddenly failed before 30 seconds had elapsed. In short, sending packets too fast would crash the RCP. Therefore, most of the goodput measurements do not extend to a full 30 seconds. However, I think they still give a good idea of what is possible.
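This was not tested here. Still, if the RCP failures really are triggered by the send rate, one obvious mitigation is to pace the sender on the Raspberry Pi instead of sending in a tight loop. Below is a minimal sketch that assumes a plain UDP socket (any object with a `sendto` method). The rate parameter is hypothetical; a rate at which the RCP stays stable would have to be found experimentally.

```python
import time


def paced_send(sock, dest, payload: bytes, packets_per_s: float, count: int) -> int:
    """Send `payload` to `dest` `count` times, at most `packets_per_s` per second."""
    interval = 1.0 / packets_per_s
    next_deadline = time.monotonic()
    for _ in range(count):
        delay = next_deadline - time.monotonic()
        if delay > 0:
            time.sleep(delay)        # wait for this packet's time slot
        sock.sendto(payload, dest)
        next_deadline += interval    # advance from the schedule, not from "now", to avoid drift
    return count
```

For example, `paced_send(sock, dest, bytes(79), 50, 1500)` would send 30 seconds' worth of 79-byte payloads at a hypothetical 50 packets per second.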

The goodput from the border router to the application device is more impressive than in the other direction. Whereas the 79 B payload goodput from the application device to the border router reaches around 5 KiBps, the goodput in the other direction reaches around 7.5 KiBps. At that transmission rate, the border router's outgoing packet queue becomes the limiting factor:

[N] MeshForwarder-: Dropping (dir queue full) IPv6 UDP msg, len:127, chksum:5681, ecn:no, sec:yes, prio:low

[N] MeshForwarder-: src:[fe80:0:0:0:404c:c223:cc7e:c8a7]:55528

[N] MeshForwarder-: dst:[fe80:0:0:0:600f:2c83:756b:ee41]:12122

[N] MeshForwarder-: Dropping (dir queue full) IPv6 UDP msg, len:127, chksum:5681, ecn:no, sec:yes, prio:low

[N] MeshForwarder-: src:[fe80:0:0:0:404c:c223:cc7e:c8a7]:55528

[N] MeshForwarder-: dst:[fe80:0:0:0:600f:2c83:756b:ee41]:12122

[N] MeshForwarder-: Dropping (dir queue full) IPv6 UDP msg, len:127, chksum:5681, ecn:no, sec:yes, prio:low

[N] MeshForwarder-: src:[fe80:0:0:0:404c:c223:cc7e:c8a7]:55528

[N] MeshForwarder-: dst:[fe80:0:0:0:600f:2c83:756b:ee41]:12122

[N] MeshForwarder-: Dropping (dir queue full) IPv6 UDP msg, len:127, chksum:5681, ecn:no, sec:yes, prio:low

[N] MeshForwarder-: src:[fe80:0:0:0:404c:c223:cc7e:c8a7]:55528

[N] MeshForwarder-: dst:[fe80:0:0:0:600f:2c83:756b:ee41]:12122

[N] MeshForwarder-: Dropping (dir queue full) IPv6 UDP msg, len:127, chksum:5681, ecn:no, sec:yes, prio:low

[N] MeshForwarder-: src:[fe80:0:0:0:404c:c223:cc7e:c8a7]:55528

[N] MeshForwarder-: dst:[fe80:0:0:0:600f:2c83:756b:ee41]:12122
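A quick back-of-the-envelope conversion puts the 5 KiBps and 7.5 KiBps figures in perspective. The sketch makes several assumptions:

- 1 KiB is 1024 B.
- Every packet carries the full 79 B payload in a 127 B frame.
- The channel runs at the 250 kbit/s rate of the 2.4 GHz 802.15.4 PHY.

It ignores PHY headers, ACK frames, and CSMA backoff, so actual channel use is higher than it reports.

```python
PAYLOAD_B = 79            # application bytes per packet
FRAME_B = 127             # bytes per 802.15.4 frame at that payload size
PHY_RATE_BPS = 250_000    # 2.4 GHz 802.15.4 O-QPSK data rate


def packets_per_second(goodput_kib_s: float) -> float:
    """Packets per second needed to sustain the given goodput with full payloads."""
    return goodput_kib_s * 1024 / PAYLOAD_B


def frame_airtime_fraction(goodput_kib_s: float) -> float:
    """Share of the raw PHY rate consumed by the data frames alone."""
    return packets_per_second(goodput_kib_s) * FRAME_B * 8 / PHY_RATE_BPS


for label, kib_s in (("device -> border router", 5.0), ("border router -> device", 7.5)):
    print(f"{label}: {packets_per_second(kib_s):.1f} pkt/s, "
          f"{frame_airtime_fraction(kib_s):.0%} of 250 kbit/s")
```

That works out to roughly 65 and 97 packets per second. The data frames alone occupy about a quarter of the channel in the first case and about 40% in the second.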

Reliability

For very simple, low-packet-rate setups, I have found UDP over Thread to be generally reliable. However, when I began sending audio data over Thread, a process that involves sending roughly 100 packets or more, I quickly noticed that packet drops and data corruption were a reality for applications on Thread. I rigged together a simple scheme to get all the audio over to the border router, but the audio application itself is beyond the scope of this post.

The question to answer here is: how reliable is Thread when sending data that must be divided into multiple payloads? For the audio application, I wanted to send a couple of seconds of audio, and I divided the data into multiple packets myself (I discuss letting Thread/IPv6 do the fragmentation next).
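The scheme used for the audio application is out of scope here, so the following is only a hypothetical sketch of manual splitting. Each payload gets a 2-byte sequence counter and a CRC-32 trailer. Both are illustrative additions, not part of Thread. They leave 73 of the 79 free bytes for data, and they let the receiver detect both missing and corrupted payloads.

```python
import struct
import zlib

MAX_PAYLOAD = 79                          # free bytes per frame, from the measurements above
SEQ = struct.Struct(">H")                 # hypothetical 2-byte big-endian sequence counter
CRC = struct.Struct(">I")                 # hypothetical CRC-32 trailer over counter + data
BODY = MAX_PAYLOAD - SEQ.size - CRC.size  # 73 data bytes per payload


def chunk(data: bytes) -> list[bytes]:
    """Split `data` into framed payloads of at most MAX_PAYLOAD bytes (up to 65,536 chunks)."""
    payloads = []
    for seq, off in enumerate(range(0, len(data), BODY)):
        head = SEQ.pack(seq) + data[off:off + BODY]
        payloads.append(head + CRC.pack(zlib.crc32(head)))
    return payloads


def reassemble(payloads: list[bytes]) -> tuple[bytes, list[int]]:
    """Discard corrupted payloads, order the rest by counter, and list the gaps."""
    parts = {}
    for p in payloads:
        if len(p) < SEQ.size + CRC.size:
            continue                      # too short to be one of ours
        head, (crc,) = p[:-CRC.size], CRC.unpack(p[-CRC.size:])
        if zlib.crc32(head) != crc:
            continue                      # corrupted in transit; treat it as lost
        parts[SEQ.unpack_from(head)[0]] = head[SEQ.size:]
    if not parts:
        return b"", []
    missing = [i for i in range(max(parts) + 1) if i not in parts]
    return b"".join(parts[i] for i in sorted(parts)), missing
```

One design caveat: `reassemble` can only see gaps below the highest counter it received. Losing the last few payloads goes unnoticed unless the sender also communicates the total count.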

For this experiment, I crafted and sent 100 packets of 127 bytes each from the application device to the border router. The application receiving the packets on the border router simply counted the number of packets it received during each run. Across ten trials, the application received 82.3% of those packets.

Which is pretty bad.

Notably, it seemed that mostly the later packets were the ones the application never received. Each individual packet had a counter value in it so that I could keep track of its ordering (and, yes, I am assuming that the byte containing the counter did not get corrupted). The application device sent these packets in rapid succession, so perhaps the border router, or even the RCP, is dropping packets due to having a full queue somewhere.
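With a counter in every packet, checking whether the losses cluster at the end of a run is straightforward. Here is a minimal sketch. It assumes the counters run from 0 to total − 1 and that the receiver simply collects whatever counters it sees. The helper name is hypothetical.

```python
def loss_report(received: list[int], total: int) -> tuple[float, list[int], float]:
    """Return (delivery ratio, missing counters, share of losses in the second half)."""
    seen = {c for c in received if 0 <= c < total}   # ignore duplicates and stray values
    missing = [c for c in range(total) if c not in seen]
    late = sum(1 for c in missing if c >= total // 2)
    late_share = late / len(missing) if missing else 0.0
    return len(seen) / total, missing, late_share
```

Running it over each trial's counters would show, per run, whether most of the missing packets came from the second half of the burst.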

Still, this result is better than being lazy and letting OpenThread itself fragment your data...

Packet fragmentation

While 802.15.4 has a maximum packet size of 127 bytes, OpenThread will accept payloads that exceed that limit. OpenThread will fragment the payload and send multiple packets (according to the 6LoWPAN protocol). So, from the application developer's perspective, there should be no difference between meticulously sizing packets yourself and letting OpenThread fragment an oversized payload, right? Wrong. If a single fragment is lost, the entire oversized payload is lost. Even if the application could have made use of the portion of the data that arrived successfully, the receiving IPv6 stack cannot reassemble the packet, so it drops it and passes nothing to the application.

This behavior makes delivery even less reliable. For example, if each packet independently has a 1% chance of being lost, a payload fragmented across two packets has a

1 - (0.99 × 0.99) = 0.0199 = 1.99%

chance of being lost.

This gets worse as the number of fragments increases. A payload fragmented across five packets has a

1 - 0.99^5 ≈ 0.049 = 4.9%

chance of being lost.
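In general, a per-packet loss probability p and n fragments give a payload loss probability of 1 - (1 - p)^n. Below is a small sketch under the same independence assumption; the function name is hypothetical. In the earlier experiment the losses clustered toward the end of each burst, which suggests they are probably not independent in practice, so treat this as a rough guide.

```python
def payload_loss_probability(p_packet_loss: float, fragments: int) -> float:
    """Chance that at least one of `fragments` independently sent packets is lost."""
    if not 0.0 <= p_packet_loss <= 1.0 or fragments < 1:
        raise ValueError("need 0 <= p <= 1 and at least one fragment")
    return 1.0 - (1.0 - p_packet_loss) ** fragments


for n in range(1, 6):
    print(f"{n} fragment(s): {payload_loss_probability(0.01, n):.2%} chance the payload is lost")
```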

So, if you care about receiving even part of your data on the other end (and it is perfectly valid not to), sizing your packets correctly, or simply switching to a reliable protocol, is preferable.

What's next?

Overall, these were just some quick-and-dirty experiments that I ran to satisfy my curiosity about what is possible on Thread, and particularly Thread on Tock, right now. Sending (relatively) large amounts of data is just one particular use case, and I did not have the simpler use case of sending up to a few bytes of sensor data in mind. My intuition is that Thread would work better in that scenario as far as reliability goes.

What I have yet to test is how all of this works in larger, stranger Thread network topologies. Thread is a mesh networking protocol, so sending data directly to a border router is the ideal case. What happens to goodput and reliability once multiple devices are meshed together, each handling not just its own data but also the data of other devices? Setting up such an experiment would be a complex undertaking, but it is nonetheless an interesting one to explore. Are there more ideal node configurations depending on how often devices send data and how much data they send?

I think the simpler and more likely reality is that I will refine this experimental setup, re-collect the data from this post, and then run the same experiments to get data for Thread on the Zephyr OS. Perhaps the implementation of Thread on Tock imposes a measurable performance penalty compared to the implementation of Thread on Zephyr. Even in its current state, though, Thread on Tock is a wonderful addition to the embedded OS's capabilities.